Strategic AI in Content Moderation

Digital platforms host an enormous volume of NSFW (Not Safe for Work) content, which complicates both content management and user safety. AI has become an important mechanism for identifying such content and preventing its dissemination, offering scalable and effective solutions where traditional methods fall short.

Advanced Detection Techniques

AI systems use deep learning models, including convolutional neural networks (CNNs), to analyze and identify NSFW content. These systems are trained on large datasets, usually tens of thousands to millions of images and text examples, which lets them recognize specific patterns with high accuracy. For example, leading tech companies report that their AI models detect explicit content with precision between 88% and 95%, which substantially reduces the human effort involved in content moderation.
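
To make the precision figure concrete, here is a minimal sketch of how precision and recall are computed from evaluation counts. The function name and the counts are hypothetical and chosen only for illustration; they are not results from any real system.

```python
def precision_recall(true_pos: int, false_pos: int, false_neg: int) -> tuple[float, float]:
    """Return (precision, recall) from confusion-matrix counts."""
    flagged = true_pos + false_pos   # everything the model flagged as NSFW
    actual = true_pos + false_neg    # everything that really was NSFW
    precision = true_pos / flagged if flagged else 0.0
    recall = true_pos / actual if actual else 0.0
    return precision, recall


# Hypothetical counts from a labeled evaluation set (illustrative, not real data).
precision, recall = precision_recall(true_pos=900, false_pos=100, false_neg=50)
print(f"precision={precision:.2f} recall={recall:.2f}")
```

Precision answers "of the items flagged, how many were actually NSFW?", while recall answers "of the NSFW items, how many were caught?". A precision claim on its own says nothing about how much explicit content slips through.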

Automation & Real-Time Processing

Working in real time is one of the key benefits of AI in this scenario. AI tools scan each piece of content for NSFW elements as it is uploaded and decide almost immediately whether it is appropriate. Real-time processing is especially useful for high-traffic platforms such as social networks, news sites, and forums, where it helps ensure that inappropriate material is found and removed before it reaches a wide audience.
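
A scan-before-publish check could be sketched as follows. This is an illustration under stated assumptions, not any platform's actual pipeline: `handle_upload`, the 0.8 threshold, and the `fake_scorer` stand-in for a trained model are all hypothetical.

```python
from typing import Callable


def handle_upload(
    content: bytes,
    score_nsfw: Callable[[bytes], float],
    threshold: float = 0.8,  # assumed cutoff; a real system would tune this
) -> bool:
    """Score the content before it goes public; return True if it may be published."""
    score = score_nsfw(content)
    return score < threshold


def fake_scorer(content: bytes) -> float:
    """Stand-in for a trained classifier, used only to make the sketch runnable."""
    return 0.95 if b"explicit" in content else 0.05


print(handle_upload(b"holiday photo", fake_scorer))
print(handle_upload(b"explicit image", fake_scorer))
```

The point of the design is ordering: the score is computed before the content becomes visible, so a rejected upload never reaches other users.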

Enriching User Experience and Security

High-precision AI filtering of NSFW visual content not only protects users, especially minors, from exposure to inappropriate material but also improves the user experience. Platforms that handle content well are the most likely to retain users and maintain a positive community environment. And because AI enforces rules against harmful content consistently, it builds trust over time: users see that the platform is serious about keeping them safe.

Challenges in Implementation

Even with the power of AI, applying it to prevent the dissemination of NSFW content is complex. Distinguishing appropriate from inappropriate content remains tricky, because the same imagery can be acceptable in one context and not in another. Take, for example, educational content that features non-sexual nudity for scientific or medical reasons, which an AI system may mistakenly classify as sexual.
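
One common way to soften this problem is to send borderline scores to a human reviewer instead of forcing an automatic decision. The sketch below assumes a classifier that outputs a score between 0 and 1; the `Decision` names and both cutoffs are hypothetical values picked for illustration.

```python
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    REVIEW = "human_review"
    BLOCK = "block"


def route(score: float, allow_below: float = 0.3, block_above: float = 0.9) -> Decision:
    """Map a classifier score in [0, 1] to a moderation decision."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score < allow_below:
        return Decision.ALLOW
    if score > block_above:
        return Decision.BLOCK
    # Ambiguous cases, such as medical or educational imagery, go to a person.
    return Decision.REVIEW


print(route(0.1), route(0.6), route(0.97))
```

Widening the review band reduces automated mistakes on ambiguous content at the cost of more human workload, so the cutoffs are a policy choice as much as a technical one.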

The Role of User Feedback

User feedback is essential to improving the accuracy of content moderation systems. Through feedback mechanisms, users can flag AI moderation errors, so that the system keeps learning from real-world cases and gets better at understanding content nuances. This feedback loop is crucial to the ongoing improvement of AI tools, making them steadily better at processing a wide range of content types respectfully and accurately.
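
As a rough sketch of such a feedback loop, the snippet below collects user reports that a human reviewer has checked and keeps only the cases where the reviewer disagreed with the model. The `Report` fields and the `corrections` helper are hypothetical names; how the corrected labels feed back into training depends on the platform.

```python
from dataclasses import dataclass


@dataclass
class Report:
    content_id: str
    model_label: str     # what the model decided: "nsfw" or "safe"
    reviewer_label: str  # label assigned after human review of the user report


def corrections(reports: list[Report]) -> list[tuple[str, str]]:
    """Return (content_id, corrected_label) pairs where review overturned the model."""
    return [
        (r.content_id, r.reviewer_label)
        for r in reports
        if r.model_label != r.reviewer_label
    ]


# Illustrative reports, not real data.
reports = [
    Report("img-1", model_label="nsfw", reviewer_label="safe"),  # false positive
    Report("img-2", model_label="nsfw", reviewer_label="nsfw"),  # model was right
    Report("img-3", model_label="safe", reviewer_label="nsfw"),  # false negative
]
print(corrections(reports))
```

The corrected pairs can then be added to the next training or evaluation set, which is one way the system gains experience from real-world mistakes.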

The Future of AI

AI's ability to prevent the sharing of NSFW content is expected to improve further over time. For example, models may get better at semantic understanding, learning from related concepts and applying richer context and content analysis. This will in turn allow more accurate NSFW filtering, with fewer false positives and false negatives, making online spaces safer places to be.

AI-driven content moderation has a strategic place in the future of our digital landscape: a safer and more secure Internet is emerging alongside the AI age that drives it.

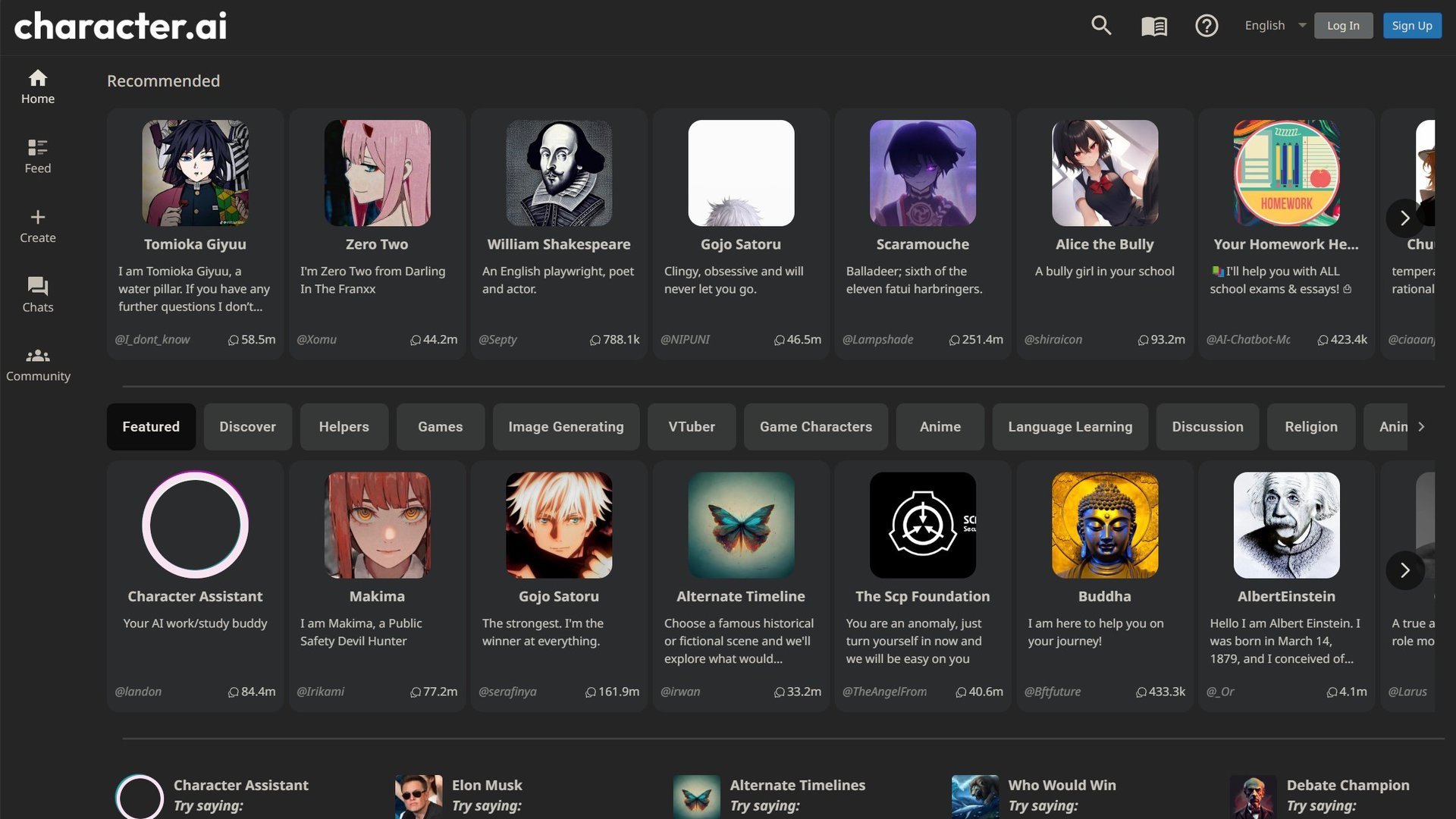

Read more at nsfw character ai for a more in-depth analysis of AI in adult content moderation tech.